Unity User Manual (2017.2)


Unity Google VR Video Async Reprojection

What is Video Async Reprojection?

Video Async Reprojection is a layer (referred to as an “external surface”) that an application can use to feed video frames directly into the async reprojection system. The main advantages of using the API are:

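1. Without this API, the video is sampled once when it is rendered into the application's color buffer, and the color buffer is then sampled again to perform distortion correction. This introduces double-sampling artifacts. An external surface passes the video directly to the EDS compositor, so the video is sampled only once, which improves its image quality.

2. When you use the external surface API, the video frame rate is decoupled from the application frame rate. The application could take as long as 1 second to render a new frame. The only consequence would be that the user sees black bars when moving their head, while the video keeps playing normally. This should significantly reduce dropped video frames and help maintain A/V sync.

3. The application can flag that it wants to play DRM-protected video. The API then creates a protected path to display the protected video while maintaining the async reprojection frame rate.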

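Known issues:

1. When using Video Async Reprojection, the camera must start at the origin (0, 0, 0). If the camera's position is not set to (0, 0, 0), errors may occur.

2. There is no publicly accessible C# interface for async reprojection. The public API is Java only.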

Enabling Async Video Reprojection

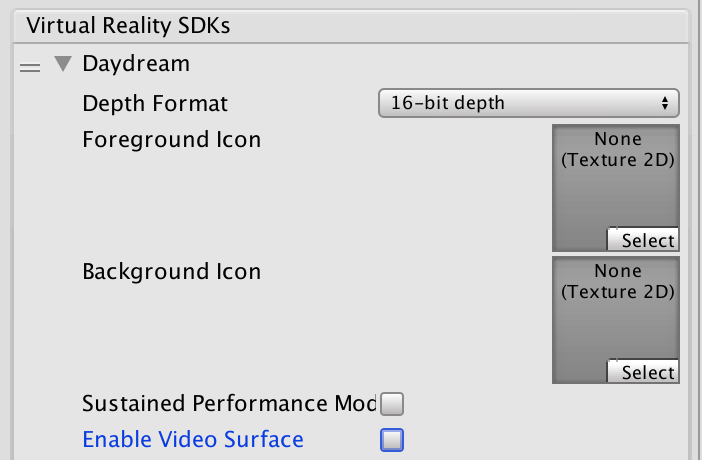

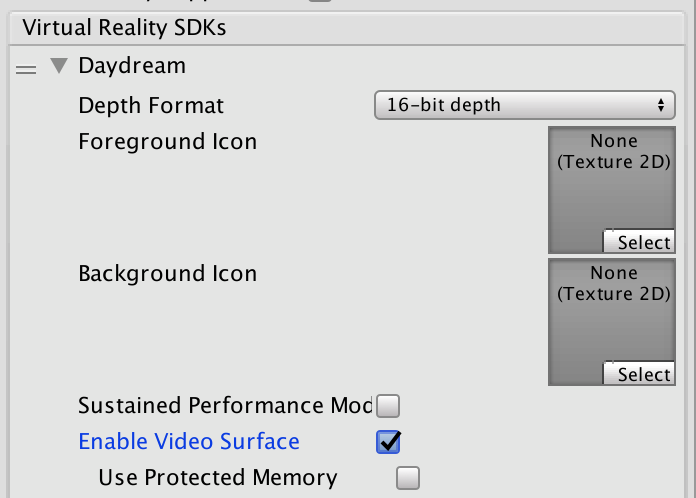

Async Video Reprojection is part of the Daydream VR device settings.

Click the grey arrow next to Daydream, then check the “Enable Video Surface” box to enable the Async Video Reprojection feature.

Select the “Use Protected Memory” option ONLY if you require memory protection for all of your content. Once this option is enabled, it stays enabled for the lifetime of the application.

API documentation

To take advantage of the Google VR API, you need to extend the UnityPlayerActivity. For more information, see the documentation on Extending the UnityPlayerActivity.

Because Java plug-ins cannot directly access objects in your scene, you need to provide a simple API to your C# code. This API lets you pass a transform to the Java side and tell your Java code when to start rendering.

Note: This code is not complete. It contains no implementation of a video player, because that is a client-specific implementation detail. It also has no playback controls. These would have to be implemented as objects in the scene, and actions on those objects would need to call into Java.

For information about using Java with Unity and extending the UnityPlayerActivity, see the documentation on Android development in Unity.

For information about the Google Video Async Reprojection system, refer to the Android Developer Network documentation on Video Viewports.

Java sample code:

package com.unity3d.samplevideoplayer;

import com.unity3d.player.GoogleVrVideo;

import com.unity3d.player.GoogleVrApi;

import com.unity3d.player.UnityPlayerActivity;

import android.app.Activity;

import android.os.Bundle;

import android.util.Log;

import android.view.Surface;

public class GoogleAVRPlayer implements GoogleVrVideo.GoogleVrVideoCallbacks {

private static final String TAG = GoogleAVRPlayer.class.getSimpleName();

// MyOwnVideoPlayer is a placeholder for your own video player implementation.

private MyOwnVideoPlayer videoPlayer;

private boolean canPlayVideo = false;

private boolean isSceneLoaded = false;

// API you present to your C# code to handle initialization of your

// video system.

public void initVideoPlayer(UnityPlayerActivity activity) {

// Initialize Video player and any other support you need…

// Register this instance as the Google Vr Video Listener to get

// lifetime and control callbacks.

GoogleVrVideo gvrv = GoogleVrApi.getGoogleVrVideo();

if (gvrv != null) gvrv.registerGoogleVrVideoListener(this);

}

// API you present to your C# code to start your video system

// playing a video.

public void play()

{

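// C# calls play() once the scene is loaded. Record this so that

// onSurfaceAvailable() can start playback if the surface arrives later.

isSceneLoaded = true;

if (canPlayVideo && videoPlayer != null && videoPlayer.isPaused())

videoPlayer.play();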

}

// API you present to your C# code to pause your video system's

// playback.

public void pause()

{

if (canPlayVideo && videoPlayer != null && !videoPlayer.isPaused())

videoPlayer.pause();

}

// Google Vr Video Listener

@Override

public void onSurfaceAvailable(Surface surface) {

// Google Vr has a surface available for you to render into.

// Use this surface with your video player as needed.

if (videoPlayer != null){

videoPlayer.setSurface(surface);

canPlayVideo = true;

if (isSceneLoaded)

{

videoPlayer.play();

}

}

}

@Override

public void onSurfaceUnavailable() {

// The Google Vr Video Surface is going away. You need to remove

// it from anything you have holding it and stop your video player.

if (videoPlayer != null){

videoPlayer.pause();

// Release the surface so that nothing keeps rendering into it.

videoPlayer.setSurface(null);

canPlayVideo = false;

}

}

@Override

public void onFrameAvailable() {

// Handle Google Vr frame available callback

}

}
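The interplay between `canPlayVideo` and `isSceneLoaded` in the sample above is easy to get wrong. The surface can become available either before or after C# calls `play()`. What follows is a minimal, Android-free sketch of that gating logic that runs on a desktop JVM.

It rests on a few assumptions. A hypothetical `VideoSink` interface stands in for `MyOwnVideoPlayer`, and a plain `Object` stands in for `android.view.Surface`. The class names `PlaybackGateDemo`, `RecordingSink` and `PlaybackGate` are illustrative only and are not part of the Unity or Google VR API.

```java
import java.util.ArrayList;
import java.util.List;

public class PlaybackGateDemo {

    // Hypothetical stand-in for MyOwnVideoPlayer; not a Unity or Google VR type.
    interface VideoSink {
        void setSurface(Object surface);
        void play();
        void pause();
        boolean isPaused();
    }

    // Records every call so the ordering can be inspected.
    static class RecordingSink implements VideoSink {
        final List<String> calls = new ArrayList<>();
        private boolean paused = true;

        public void setSurface(Object surface) {
            calls.add(surface == null ? "setSurface(null)" : "setSurface");
        }
        public void play() { paused = false; calls.add("play"); }
        public void pause() { paused = true; calls.add("pause"); }
        public boolean isPaused() { return paused; }
    }

    // Mirrors the canPlayVideo / isSceneLoaded logic of the sample player.
    static class PlaybackGate {
        private final VideoSink player;
        private boolean canPlayVideo = false;
        private boolean playRequested = false; // plays the role of isSceneLoaded

        PlaybackGate(VideoSink player) { this.player = player; }

        // Called from C# (through JNI in the real sample).
        void play() {
            playRequested = true;
            if (canPlayVideo && player.isPaused()) player.play();
        }

        // Corresponds to onSurfaceAvailable(Surface).
        void onSurfaceAvailable(Object surface) {
            player.setSurface(surface);
            canPlayVideo = true;
            if (playRequested && player.isPaused()) player.play();
        }

        // Corresponds to onSurfaceUnavailable().
        void onSurfaceUnavailable() {
            player.pause();
            player.setSurface(null);
            canPlayVideo = false;
        }
    }

    public static void main(String[] args) {
        // Case 1: C# calls play() before the surface exists.
        RecordingSink early = new RecordingSink();
        PlaybackGate gate1 = new PlaybackGate(early);
        gate1.play(); // nothing plays yet, but the request is remembered
        gate1.onSurfaceAvailable(new Object());
        System.out.println(early.calls); // [setSurface, play]

        // Case 2: the surface exists before C# calls play().
        RecordingSink late = new RecordingSink();
        PlaybackGate gate2 = new PlaybackGate(late);
        gate2.onSurfaceAvailable(new Object());
        gate2.play();
        gate2.onSurfaceUnavailable();
        System.out.println(late.calls); // [setSurface, play, pause, setSurface(null)]
    }
}
```

The play request is remembered in a flag rather than dropped. This lets playback start regardless of which event arrives first.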

Unity C# sample code:

using System;

using System.Collections;

using System.Collections.Generic;

using System.Text;

using UnityEngine;

public class GoogleVRVideo : MonoBehaviour {

private AndroidJavaObject googleAvrPlayer = null;

private AndroidJavaObject googleVrVideo = null;

void Awake()

{

if (googleAvrPlayer == null)

{

googleAvrPlayer = new AndroidJavaObject("com.unity3d.samplevideoplayer.GoogleAVRPlayer");

}

AndroidJavaClass googleVrApi = new AndroidJavaClass("com.unity3d.player.GoogleVrApi");

// getGoogleVrVideo returns null if Google VR video support is unavailable.

googleVrVideo = googleVrApi.CallStatic<AndroidJavaObject>("getGoogleVrVideo");

}

void Start()

{

if (googleVrVideo != null)

{

// We need to tell Google VR the location of the video surface in

// world space. Since there isn't a way to get at that info from

// Java, we calculate it here and then pass the resulting matrix

// down to the GoogleVrVideo API.

Matrix4x4 wm = transform.localToWorldMatrix;

wm = Camera.main.transform.parent.worldToLocalMatrix * wm;

wm = wm * Matrix4x4.Scale(new Vector3(0.5f, 0.5f, 1));

// Flatten the 4x4 matrix (indexed [row, column]) into a 16-element

// array in column-major order. The transposition makes sure that the

// matrix is in the order that Google VR uses, and flattening it makes

// passing it over the JNI boundary easier. If you pass the array to

// your own Java code instead, you have to convert it back to a 4x4

// matrix on the Java side.

float[] matrix = new float[16];

for (int i = 0; i < 4; i++)

{

for (int j = 0; j < 4; j++)

{

matrix[i * 4 + j] = wm[j,i];

}

}

googleVrVideo.Call("setVideoLocationTransform", matrix);

}

if (googleAvrPlayer != null)

{

AndroidJavaClass jc = new AndroidJavaClass("com.unity3d.player.UnityPlayer");

AndroidJavaObject jo = jc.GetStatic<AndroidJavaObject>("currentActivity");

googleAvrPlayer.Call("initVideoPlayer", jo);

googleAvrPlayer.Call("play");

}

}

}
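The C# sample flattens the matrix in column-major order before sending it across the JNI boundary. Its comments note that your own Java code would have to rebuild a 4x4 matrix from that array. Below is a minimal sketch of both directions in plain Java.

It assumes the matrix is held as a `float[4][4]` indexed `[row][column]`, the same convention as Unity's `Matrix4x4` indexer. The class `ColumnMajor` and its methods are hypothetical helpers, not part of the Unity or Google VR API.

```java
import java.util.Arrays;

public class ColumnMajor {

    // Flatten a [row][column] 4x4 matrix into a 16-element column-major array,
    // matching the C# loop: matrix[i * 4 + j] = wm[j, i].
    static float[] flatten(float[][] m) {
        float[] out = new float[16];
        for (int col = 0; col < 4; col++) {
            for (int row = 0; row < 4; row++) {
                out[col * 4 + row] = m[row][col];
            }
        }
        return out;
    }

    // Rebuild the [row][column] 4x4 matrix from a column-major array.
    static float[][] unflatten(float[] a) {
        if (a.length != 16) {
            throw new IllegalArgumentException("expected 16 elements, got " + a.length);
        }
        float[][] m = new float[4][4];
        for (int col = 0; col < 4; col++) {
            for (int row = 0; row < 4; row++) {
                m[row][col] = a[col * 4 + row];
            }
        }
        return m;
    }

    public static void main(String[] args) {
        // A translation by (1, 2, 3): the translation lives in the last column.
        float[][] t = {
            {1, 0, 0, 1},
            {0, 1, 0, 2},
            {0, 0, 1, 3},
            {0, 0, 0, 1}
        };
        float[] flat = flatten(t);
        // In column-major order the translation ends up at indices 12, 13 and 14.
        System.out.println(flat[12] + " " + flat[13] + " " + flat[14]); // 1.0 2.0 3.0
        System.out.println(Arrays.deepEquals(t, unflatten(flat)));     // true
    }
}
```

The length check in `unflatten` is a defensive choice. Because an array received over JNI carries no shape information, a wrong-sized array should fail loudly rather than silently produce a corrupted transform.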