Unity User Manual (2017.2)

Holographic Emulation

Holographic Emulation allows you to prototype, debug, and run Microsoft HoloLens projects directly in the Unity Editor rather than building and running the game every time you want to see the effect of a change. This vastly reduces time between iterations when developing holographic applications in Unity.

Holographic Emulation has two different modes:

Remote to Device: Using a connection to a Windows Holographic device, your application behaves as if it were deployed to that device, while really running in the Unity Editor on your host machine. See Remote to Device, below, for more information.

Simulate in Editor: Your application runs on a simulated Holographic device directly in the Unity Editor, with no connection to a real-world Windows Holographic device. See Simulate in Editor, below, for more information.

Holographic Emulation is supported on any machine running Windows 10 Anniversary Update or later.

Getting started

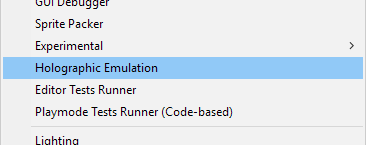

To enable remoting or simulation, open the Unity Editor and go to Window > Holographic Emulation:

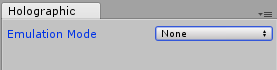

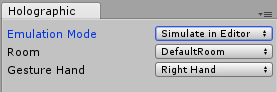

This opens the Holographic Emulation control window, containing the Emulation Mode drop-down menu. Keep this window visible during development; you need to be able to access its settings when you launch your application.

Emulation Mode is set to None by default, which means that your application runs in the Editor without any of the Holographic API functionality. Change the Emulation Mode to Remote to Device or Simulate in Editor to enable that mode and begin Holographic Emulation.

Remote to Device

To use this mode, connect the machine running the Unity Editor (the “host machine”) to a Windows Holographic device (the “device”). See Connecting your device, below. Your application behaves as if it were deployed to that device, while actually running in the Unity Editor on the host machine.

The Unity Editor has access to the spatial sensor data and head tracking of the connected device. The Game view in the Unity Editor shows what is being rendered on the device, but not what the wearer of the device sees of the real world. (See an example in the image above titled ‘Holographic Emulation: The Unity Editor runs as a holographic application’.)

Note that Remote to Device is not useful for validating performance, because your application runs on the host machine rather than on the device itself. However, it is a quick way to test changes during development.

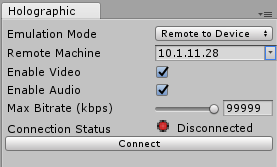

To enable this mode in the Unity Editor, set the Emulation Mode to Remote to Device. The interface changes to reflect the additional options available.

Connecting your device

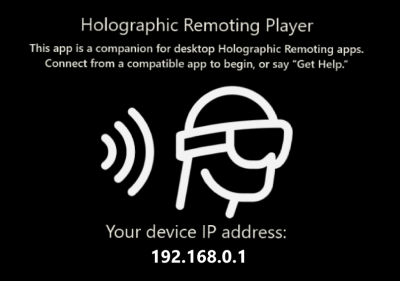

Install and run the Holographic Remoting Player on the device. It is available from the Windows Store. When launched, the Remoting Player enters a waiting state and displays the IP address of the device on the Holographic Remoting Player screen. For more information about the Remoting Player, including how to enable connection diagnostics, see the Microsoft Windows Dev Center.

In the Holographic Emulation control window in the Unity Editor, enter the IP address of your device into the Remote Machine field. The drop-down button to the right of the field allows you to select recently used addresses.

Press the Connect button. The connection status indicator turns green and displays a connected message.

Now click Play in the Unity Editor to run your application remotely on the device. You can pause, inspect GameObjects, and debug in the same way as when running an app in the Editor. The difference is that video, audio, and device input are transmitted back and forth across the network between the host machine and the device.

Known limitations

Speech recognition (PhraseRecognizer) does not use the device in Remote to Device mode. Instead, it captures speech from the host machine running the Unity Editor.

While Remote to Device is running, all audio on the host machine redirects to the device, including audio from outside your application.

Simulate in Editor

In this mode, your application runs on a simulated Holographic device directly in the Unity Editor, with no connection to a real-world Windows Holographic device. This is useful for developing for Windows Holographic when you don’t have access to a device. (Note that you still need to test on a real device rather than relying solely on the emulator.)

To enable this mode, set the Emulation Mode to Simulate in Editor and press the Play button. Your application starts in an emulator built into the Unity Editor.

In the Holographic Emulation control window, use the Room drop-down menu to choose one of five virtual rooms (the same rooms supplied with the XDE HoloLens Emulator). Use the Gesture Hand drop-down menu to specify which virtual hand (left or right) performs gestures.

In Simulate in Editor mode, you need to use a game controller (such as an Xbox 360 or Xbox One controller) to control the virtual human player. If you don’t have a controller, the simulation still works, but you cannot move the virtual human player around.

| Control | Function |
|---|---|
| Left stick | Push up or down to move the virtual human player forward or backward; push left or right to move left or right. |
| Right stick | Push up or down to pitch the virtual human player’s head up or down; push left or right to turn the virtual human player left or right. |
| D-pad | Move the virtual human player up or down, or roll the head left or right. |
| Left trigger, right trigger, or A button | Perform a tap gesture with a virtual hand. |
| Y button | Reset the pitch and roll of the virtual human player’s head. |

To use the game controller, the Game view must have focus in the Unity Editor. After interacting with any other part of the Editor UI, click the Game view once to return focus to it.

Known limitations

Most game controllers work with Simulate in Editor mode, as long as they are recognised by Windows and Unity; however, there may be some incompatibility with unsupported controllers.

You can use PhotoCapture in Simulate in Editor mode. However, because there is no device present, you need to use your own local camera (such as an attached webcam). This means you cannot retrieve a matrix with TryGetProjectionMatrix or TryGetCameraToWorldMatrix, because a normal local camera cannot compute where it is in relation to the real world.