Unity Manual

Unity User Manual 2021.3 (LTS)

AR development in Unity

Get started with augmented reality development in Unity.

Augmented Reality (AR) involves a different set of design challenges compared to VR (Virtual Reality) or traditional real-time 3D applications. An augmented reality app overlays its content on the real world around the user. Head-mounted AR devices, such as glasses and visors, use transparent displays that let the user see the real world with virtual content overlaid on top, while handheld mobile devices show the camera feed with the virtual content composited over it.

To place an object in the real world, you must first determine where to place it. For example, you might want to place a virtual painting on a physical wall. If you place a virtual potted plant, you might want it on a physical table or the floor. An AR app receives information about the world from the user's device and decides how to use this information to create a good experience for the user. Depending on the capabilities of the target device, this information can include the location of planar surfaces (planes) and detected objects, people, and faces.
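To make the placement step concrete, the following Python sketch shows the geometry behind a typical hit test: cast a ray from the camera through the point the user touched, intersect it with each detected plane, and keep the nearest hit. This is not AR Foundation's API (AR Foundation performs raycasts for you in C#). The `DetectedPlane` type, its horizontal orientation, and its rectangular, axis-aligned bounds are simplifying assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple

Vec3 = Tuple[float, float, float]
UP: Vec3 = (0.0, 1.0, 0.0)  # normal of a horizontal plane (Unity's +Y is up)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def along(origin: Vec3, direction: Vec3, t: float) -> Vec3:
    """Point reached after moving t times `direction` from `origin`."""
    return (origin[0] + direction[0] * t,
            origin[1] + direction[1] * t,
            origin[2] + direction[2] * t)


@dataclass
class DetectedPlane:
    """Hypothetical horizontal plane (floor, table) with rectangular bounds."""
    center: Vec3
    half_size_x: float  # half-width along world X
    half_size_z: float  # half-depth along world Z


def hit_distance(origin: Vec3, direction: Vec3,
                 plane: DetectedPlane) -> Optional[float]:
    """Ray parameter t at which the ray hits the plane's bounds, else None."""
    denom = dot(direction, UP)
    if abs(denom) < 1e-9:
        return None  # ray is parallel to the plane
    t = dot(sub(plane.center, origin), UP) / denom
    if t < 0:
        return None  # plane is behind the ray origin
    local = sub(along(origin, direction, t), plane.center)
    if abs(local[0]) > plane.half_size_x or abs(local[2]) > plane.half_size_z:
        return None  # hits the infinite plane, but outside the detected area
    return t


def place_on_nearest_plane(origin: Vec3, direction: Vec3,
                           planes: Iterable[DetectedPlane]) -> Optional[Vec3]:
    """Closest hit point across all planes, or None if no plane is hit."""
    best: Optional[float] = None
    for plane in planes:
        t = hit_distance(origin, direction, plane)
        if t is not None and (best is None or t < best):
            best = t
    return None if best is None else along(origin, direction, best)


if __name__ == "__main__":
    floor = DetectedPlane(center=(0.0, 0.0, 2.0), half_size_x=1.0, half_size_z=1.0)
    table = DetectedPlane(center=(0.0, 0.75, 1.0), half_size_x=0.4, half_size_z=0.3)
    # Camera 1.5 m up, looking forward and down at 45 degrees: the table is hit first.
    print(place_on_nearest_plane((0.0, 1.5, 0.0), (0.0, -1.0, 1.0), [floor, table]))
    # prints (0.0, 0.75, 0.75)
```

Keeping the nearest hit matters because a ray can pass through several planes; the potted plant should land on the table top the user is looking at, not on the floor underneath it.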

The following sections provide an overview of an AR scene, the AR packages, and the AR template you can use to develop AR applications in Unity.

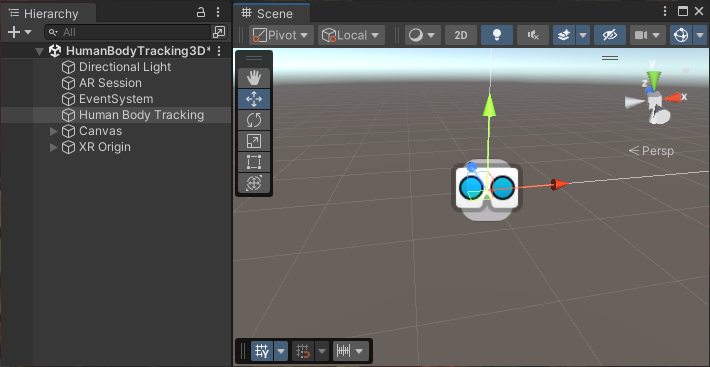

Introduction to an AR scene

When you open a typical AR scene in Unity, you won't find many 3D objects in the Scene view or the Hierarchy window. Instead, most GameObjects in the scene define the settings and logic of the app. 3D content is typically created as prefabs that are added to the scene at runtime in response to AR-related events.

A typical AR scene in the Unity Editor.
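The pattern described above, where 3D content lives in prefabs and is instantiated when the AR system reports a change, can be sketched in Python as follows. The event shape is modeled loosely on the added, updated, and removed lists that AR Foundation's trackable managers report, but `PlanesChanged`, `Prefab`, and `ContentSpawner` are hypothetical names, and `copy.deepcopy` stands in for Unity's `Object.Instantiate`.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Prefab:
    """Template asset: the data every spawned instance starts from."""
    name: str
    properties: Dict[str, object] = field(default_factory=dict)


@dataclass
class Instance:
    prefab_name: str
    position: Vec3
    properties: Dict[str, object]


@dataclass
class PlanesChanged:
    """Hypothetical change event: planes added, updated, or removed this frame."""
    added: List[Tuple[str, Vec3]] = field(default_factory=list)
    updated: List[Tuple[str, Vec3]] = field(default_factory=list)
    removed: List[str] = field(default_factory=list)


class ContentSpawner:
    """Keeps one prefab instance on each tracked plane."""

    def __init__(self, prefab: Prefab) -> None:
        self.prefab = prefab
        self.instances: Dict[str, Instance] = {}

    def on_planes_changed(self, event: PlanesChanged) -> None:
        for plane_id, center in event.added:
            # Each instance gets its own copy of the template data.
            self.instances[plane_id] = Instance(
                self.prefab.name, center, copy.deepcopy(self.prefab.properties))
        for plane_id, center in event.updated:
            if plane_id in self.instances:
                # The device refined the plane; keep the content on it.
                self.instances[plane_id].position = center
        for plane_id in event.removed:
            # Tracking for this plane ended; drop its content.
            self.instances.pop(plane_id, None)
```

Because the scene starts almost empty, what the user eventually sees is driven entirely by these events rather than by objects placed in the Editor.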

AR scene elements

The following sections outline the required scene elements that make AR work and the optional scene elements you can add to enable specific AR features. To learn more about how to configure an XR scene, refer to Set up an XR scene.

Note: AR GameObjects appear in the Create menu when you enable an AR provider plug-in in XR Plug-in Management.

Required scene elements

An AR scene must contain the AR Session and XR Origin GameObjects. The available options for the XR Origin depend on the packages you've installed in your project: AR Foundation uses the XR Origin (Mobile AR) variant, and the XR Interaction Toolkit uses the XR Origin (AR) variant.

If you start your project from an AR template, these GameObjects come already configured in the scene.

To manually add the relevant XR Origin and AR Session GameObjects:

- Right-click in the Hierarchy window and select XR, or go to GameObject > XR in the main menu.

- Select the relevant GameObject.

Note: A scene must have only one active XR Origin.
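The rules in this section (the scene contains an AR Session and an XR Origin, and exactly one XR Origin is active) lend themselves to a simple automated check. The Python sketch below is hypothetical: it models the Hierarchy as a flat list of `SceneEntry` records instead of using any Unity API, which in a real project you would do with an Editor script in C#.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SceneEntry:
    """Hypothetical flat view of one GameObject in the Hierarchy."""
    name: str
    kind: str  # e.g. "ARSession", "XROrigin", or anything else
    active: bool = True


def validate_ar_scene(entries: List[SceneEntry]) -> List[str]:
    """Return a list of problems; an empty list means the required elements are in place."""
    problems: List[str] = []
    if not any(e.kind == "ARSession" for e in entries):
        problems.append("missing AR Session")
    origins = [e for e in entries if e.kind == "XROrigin"]
    active_origins = [e for e in origins if e.active]
    if not origins:
        problems.append("missing XR Origin")
    elif len(active_origins) != 1:
        # Inactive spare origins are allowed; zero or several active ones are not.
        names = ", ".join(e.name for e in active_origins) or "none"
        problems.append(f"expected exactly one active XR Origin, found: {names}")
    return problems
```

A common way to end up with two active origins is adding an XR Origin from the menu to a scene that was started from a template, which already contains one.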

AR Session

The AR Session GameObject contains the components you need to control the lifecycle and input of the AR experience:

- AR Session component

- AR Input Manager component

XR Origin (Mobile AR)

The XR Origin (Mobile AR) GameObject is a variant of the XR Origin for hand-held AR applications. This variant isn’t configured for controller input by default. This version of the XR Origin is used by AR Foundation.

The XR Origin (Mobile AR) GameObject consists of the following:

- XR Origin component

- Camera Offset GameObject:

For more information, refer to AR Foundation Set up your scene and Device tracking.
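The camera sits under the Camera Offset inside the XR Origin because the tracking system drives the camera's local pose. Its final position in the Unity scene is therefore the XR Origin's transform applied to the Camera Offset plus the tracked device position. The Python sketch below shows that composition under simplifying assumptions: rotation is limited to yaw (rotation about the vertical axis), scale is 1, and `XROriginPose` and `camera_world_position` are hypothetical names, not Unity API. It also shows why you move the XR Origin, not the camera, to reposition the user: tracking overwrites the camera's local pose every frame.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


def rotate_yaw(v: Vec3, degrees: float) -> Vec3:
    """Rotate v about the world up (Y) axis. With Unity's left-handed axes,
    +90 degrees turns forward (+Z) into right (+X)."""
    r = math.radians(degrees)
    c, s = math.cos(r), math.sin(r)
    return (v[0] * c + v[2] * s, v[1], -v[0] * s + v[2] * c)


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


@dataclass
class XROriginPose:
    """Hypothetical: the XR Origin's world position and yaw, plus the Camera Offset."""
    position: Vec3
    yaw_degrees: float
    camera_offset: Vec3 = (0.0, 0.0, 0.0)


def camera_world_position(origin: XROriginPose, tracked_local: Vec3) -> Vec3:
    """Compose XR Origin -> Camera Offset -> tracked device position."""
    in_origin_space = add(origin.camera_offset, tracked_local)
    return add(origin.position, rotate_yaw(in_origin_space, origin.yaw_degrees))


if __name__ == "__main__":
    # Same tracked pose, two origin placements: only the origin moved.
    tracked = (0.0, 1.4, 1.0)
    print(camera_world_position(XROriginPose((0.0, 0.0, 0.0), 0.0), tracked))
    print(camera_world_position(XROriginPose((10.0, 0.0, 5.0), 90.0), tracked))
```

Trackables such as planes are reported in the same session space as the device, so the XR Origin's transform is applied to them as well, which keeps virtual content aligned with the camera after the origin moves.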

XR Origin (AR)

The XR Origin (AR) GameObject is a variant of the XR Origin for hand-held AR applications and comes configured for controller input. The XR Interaction Toolkit package provides this variant.

The XR Origin (AR) GameObject consists of the following:

- XR Origin component

- Camera Offset GameObject:

- LeftHand/RightHand Controller GameObjects:

Refer to Create a basic scene to learn more about scene configuration with XR Interaction Toolkit.

Optional scene elements

To enable an AR feature, you must add the corresponding components to your scene. Typically, this includes an AR manager component, but some features also require additional components.

To learn more about the required components for AR Foundation features, refer to the documentation for the relevant AR Foundation feature. For more in-depth information about setting up your AR Foundation app, refer to Scene set up.

AR packages

To build AR apps in Unity, you can install the AR Foundation package along with the XR provider plug-ins for the devices you want to support.

To develop AR/MR (Mixed Reality) apps for the Apple Vision Pro device, you also need the PolySpatial visionOS packages. Unity provides additional packages, including the XR Interaction Toolkit, to make it easier and faster to develop AR experiences.

AR provider plug-ins

AR platforms are supported through provider plug-ins, which you manage with the XR Plug-in Management system. To learn how to use XR Plug-in Management to add and enable provider plug-ins for your target platforms, refer to XR Project set up.

The AR provider plug-ins supported by Unity include:

| Plug-in | Supported devices |
|---|---|
| Apple ARKit XR Plug-in | iOS |
| Apple visionOS XR Plug-in | visionOS |
| Google ARCore XR Plug-in | Android |
| OpenXR Plug-in | Devices with an OpenXR runtime |

Note: Depending on the platform or device, you might need to install additional packages along with OpenXR. For example, to build an AR app for HoloLens 2, you must install Microsoft's Mixed Reality OpenXR Plugin.

AR Foundation

The AR Foundation package supports AR development in Unity.

AR Foundation enables you to create multi-platform AR apps with Unity. In an AR Foundation project, you choose which AR features to enable by adding the corresponding manager components to your scene. When you build and run your app on an AR device, AR Foundation enables these features using the platform's native AR SDK, so you can build your app once and deploy it to multiple AR platforms.

A device can be AR-capable without supporting all possible AR features. Available functionality depends on both the device platform and the capabilities of the specific device. Even on the same platform, capabilities can vary from device to device. For example, a specific device model might support AR through its world-facing camera, but not its user-facing camera. To learn which platforms support each AR Foundation feature, refer to the Platform support table in the AR Foundation documentation.
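Because support varies from device to device, an app usually checks what the current device supports before relying on a feature: it enables optional features only where they are available and fails clearly when a required one is missing. AR Foundation exposes this kind of information at runtime (for example, through subsystem descriptors). The Python sketch below only illustrates the decision logic; `plan_features`, `MissingFeatureError`, the feature names, and the capability sets are all hypothetical.

```python
from typing import FrozenSet, Iterable, List, Tuple


class MissingFeatureError(RuntimeError):
    """Raised when the device lacks a feature the app cannot work without."""


def plan_features(required: Iterable[str], optional: Iterable[str],
                  supported: FrozenSet[str]) -> Tuple[List[str], List[str]]:
    """Split requested features into (enabled, skipped) for this device.

    Raises MissingFeatureError if any required feature is unsupported.
    """
    required = list(required)
    missing = [f for f in required if f not in supported]
    if missing:
        raise MissingFeatureError(f"device lacks required features: {missing}")
    enabled = list(required)
    skipped: List[str] = []
    for feature in optional:
        if feature in supported:
            enabled.append(feature)
        else:
            skipped.append(feature)  # degrade gracefully instead of failing
    return enabled, skipped
```

For example, a hypothetical phone whose capabilities are `frozenset({"plane_detection", "image_tracking"})` (world-facing features only) would enable plane detection and image tracking and skip an optional `"face_tracking"` feature, matching the world-facing versus user-facing camera example above.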

PolySpatial visionOS packages

Augmented and mixed reality development for the Apple Vision Pro device relies on a set of packages that implement the Unity PolySpatial architecture on the visionOS platform.

The PolySpatial architecture splits a Unity game or app into two logical pieces: a simulation controller and a presentation view. The simulation controller drives all app-specific logic, such as MonoBehaviours and other scripting, user interface behavior, asset management, physics, etc. Almost all of your game's behavior is part of the simulation. The presentation view handles both input and output, which includes rendering to the display and other forms of output, such as audio. The view sends input received from the operating system, including pinch gestures and head position, to the simulation for processing each frame. After each simulation step, the view updates the display by rendering pixels to the screen, submitting audio buffers to the system, etc.

On the visionOS platform, the simulation part runs in a Unity Player, while the presentation view is rendered by Apple’s RealityKit. For every visible object in the simulation, a corresponding object exists in the RealityKit scene graph.
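The split between simulation and presentation can be pictured as a per-frame loop. The Python sketch below is a conceptual model only, not PolySpatial's API: `Simulation`, `PresentationView`, `run_frame`, and the `("pinch", position)` input tuples are hypothetical. The view forwards input to the simulation, the simulation runs the app logic, and the view then mirrors every visible simulation object as a node in its own scene graph, in the same way RealityKit keeps a counterpart for each visible object.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
InputEvent = Tuple[str, Vec3]  # hypothetical: (gesture name, world position)


@dataclass
class SimObject:
    object_id: int
    position: Vec3
    visible: bool = True


class Simulation:
    """Owns all app state and logic (stand-in for scripts, physics, UI behavior)."""

    def __init__(self) -> None:
        self.objects: Dict[int, SimObject] = {}
        self._next_id = 1

    def step(self, inputs: List[InputEvent]) -> List[SimObject]:
        for gesture, position in inputs:
            if gesture == "pinch":  # example app rule: a pinch spawns an object
                self.objects[self._next_id] = SimObject(self._next_id, position)
                self._next_id += 1
        return [o for o in self.objects.values() if o.visible]


class PresentationView:
    """Collects OS input and keeps one scene-graph node per visible sim object."""

    def __init__(self) -> None:
        self.scene_graph: Dict[int, Vec3] = {}
        self.pending_input: List[InputEvent] = []

    def receive_os_input(self, event: InputEvent) -> None:
        self.pending_input.append(event)

    def take_input(self) -> List[InputEvent]:
        events, self.pending_input = self.pending_input, []
        return events

    def sync(self, visible: List[SimObject]) -> None:
        # Rebuilt every frame for simplicity; a real system would send only changes.
        self.scene_graph = {o.object_id: o.position for o in visible}


def run_frame(sim: Simulation, view: PresentationView) -> None:
    """One frame: input flows to the simulation, results flow back to the view."""
    view.sync(sim.step(view.take_input()))
```

The simulation never touches the scene graph directly; the view only reflects what the simulation reports, which is what lets the two halves run on different systems.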

Note: PolySpatial is only used for augmented and mixed reality on the Apple Vision Pro. Virtual reality and windowed apps run in a Unity Player that also controls rendering (using the Apple Metal graphics API).

XR Interaction Toolkit

The Unity XR Interaction Toolkit provides tools for building both AR and VR interactions. The AR functionality provided by the XR Interaction Toolkit includes:

- AR gesture system to map screen touches to gesture events

- AR placement Interactable component to help place virtual objects in the real world

- AR gesture Interactor and Interactable components to support object manipulations such as place, select, translate, rotate, and scale

- AR annotations to inform users about AR objects placed in the real world
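As an illustration of what mapping screen touches to gesture events involves, the Python sketch below classifies a finished one-finger touch as a tap, long press, or drag, and derives a scale factor and rotation angle from two fingers moving between frames, which is the kind of data rotate and scale manipulations consume. It is not the XR Interaction Toolkit's implementation; the thresholds and the function names are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # screen position in pixels

# Hypothetical thresholds; tune them for your app and screen density.
MOVE_THRESHOLD_PX = 10.0
TAP_MAX_SECONDS = 0.3


def classify_single_touch(start: Point, end: Point, duration_s: float) -> str:
    """Classify a finished one-finger touch as 'tap', 'long_press', or 'drag'."""
    if math.dist(start, end) > MOVE_THRESHOLD_PX:
        return "drag"
    return "tap" if duration_s <= TAP_MAX_SECONDS else "long_press"


def two_finger_delta(prev_a: Point, prev_b: Point,
                     cur_a: Point, cur_b: Point) -> Tuple[float, float]:
    """Return (scale_factor, rotation_degrees) between two frames of a pinch/twist.

    scale_factor > 1 means the fingers spread apart. The rotation sign follows
    the screen's axis orientation and is wrapped into [-180, 180).
    """
    prev = (prev_b[0] - prev_a[0], prev_b[1] - prev_a[1])
    cur = (cur_b[0] - cur_a[0], cur_b[1] - cur_a[1])
    prev_len = math.hypot(*prev)
    if prev_len == 0:
        raise ValueError("fingers started at the same point")
    scale = math.hypot(*cur) / prev_len
    angle = math.degrees(math.atan2(cur[1], cur[0]) - math.atan2(prev[1], prev[0]))
    return scale, (angle + 180.0) % 360.0 - 180.0
```

Wrapping the angle matters when the line between the fingers crosses the negative x-axis: the raw difference of the two `atan2` results jumps by almost 360 degrees even though the fingers barely moved.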

For more information about the template assets and sample scene, refer to the AR Mobile template documentation.
